If it were me, I would simply choose not to be on the wrong side of history.

Well, we did end up with a TACO truck on every corner, but not in the way we meant.

I think my favorite thing about leaked LLM system prompts is that they codify what programmers have been screaming at, demanding from, and pleading with software to do for decades.

This sentiment could come from any time between Babbage and now: “For the love of all that is holy, please just goddamned work, just once! Do not give me the same error you have before! I do not care about that particular corner-case you are obsessed with, and I want you to just do as I fucking say!”

Only now, instead of landing as flecks of spittle on the monitor, it’s checked in and versioned.

A while back, one of my kids and his girlfriend and some mutual friends of theirs went to Las Vegas to celebrate her birthday over a long weekend. As young people are wont to do, they booked the crappiest hotel for the cheapest price, to have more money to hand directly to the casinos. I did exactly the same thing when I was their age.

But Vegas has changed, and fraying carpets and broken sinks are not the only terrors that await you on the low end anymore.

I have been a programmer for a very (very) (very) long time and it is my considered (and correct) opinion that the best software development methodology is not Agile or Waterfall or Spiral or Scrum or Kanban or Rapid Application Development or Feature-Driven Development or Test-Driven Development or Extreme or Lean or Joint Application Development or any of a couple of dozen others produced by people with books to sell and seminars to schedule.

The best software development methodology consists of one person with a list of things to do in a text file. If a piece of software cannot be developed via this method, it should not be developed at all. Such things have only gotten us in trouble.

In very, very rare circumstances — operating systems, the space program, any AAA video game I like — the second-best software development methodology is allowed. This consists of a team of four or five people who share the text file and go out to lunch together every day but don’t talk about work.

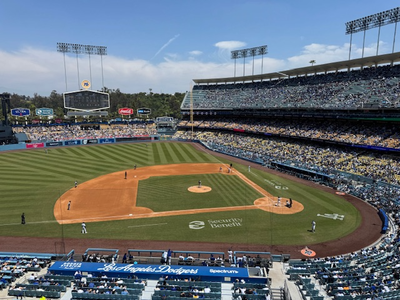

We are — as all good people are — Dodgers fans, and so we get taken out to the ballgame, and we root, root, root the home team, and if they don’t win it’s a shame, and then we go get milkshakes.

I can’t tell you how we started doing this — other than a vague sense that there should always be milkshakes — but I do know that any time a game ends, we take the Academy exit, turn onto Stadium Way, and then merge with Riverside. A couple of miles down the road, at the intersection with Fletcher, there’s Rick’s.

Rick’s is… an institution. That’s a polite way of saying it’s got three and a half stars on Yelp. I’ve never actually eaten their food, but the shakes are good and it’s got a greasy-spoon charm and they’re open after games and it is an institution.

During the pandemic, they put “SPAGHETTI IS BACK” on their marquee, and it went viral, because we were all kind of nuts in 2021.

Yesterday, as a buddy and I were driving through to get milkshakes — Dodgers: 12, Marlins: 7, in a game that had more bad fielding than I’ve seen before in my life, combined — the sign had this:

(Yes, I know it’s a bad photo. My windshield was so dirty, the phone focused on that instead of the actual sign. Leave me alone.)

It said, “HAPPY BDAY CRASHOVERRIDE”. Which, no, it couldn’t possibly have.

“Crash Override” is the hero from the much-beloved and genuinely bad 1995 nerd movie “Hackers,” which posits that technically proficient people look like Jonny Lee Miller and Angelina Jolie in their mid-20s.

How? Why? What?

Maybe it was the release date? No, “Hackers” came out on September 15. Miller’s birthday? No, that’s November 15. Maybe they filmed some of the movie at Rick’s? No, it was shot in New York. Maybe they meant “HAPPY BIDET CRASHOVERRIDE”? I don’t know.

And so I committed the smallest act of journalism possible, which should qualify me for a Pulitzer given how things are going: I called the restaurant.

I said, “This is a weird question, but I came by last night after the game and saw the marquee said ‘HAPPY BDAY CRASHOVERRIDE’ and I was wondering if you knew why?”

And the poor woman who happened to be standing closest to the phone when it rang said: “I don’t know. The customers write stuff down and we put it up and we don’t know what it means.” Which, I admit, is disappointing and leaves me with no ending to this story.

Good milkshakes, though.

My son calls the achy-muscled, sticky-mouthed aftermath of falling asleep on the sofa while watching TV “waking up on Roku Road.”

Atom Feed